Is AI a risk or a blessing?

The article “Is AI an Existential Risk?” (Rand, 2024) prompted me to write this post.

Artificial Intelligence (AI) is a hype nowadays, but it has already been around for a long time.

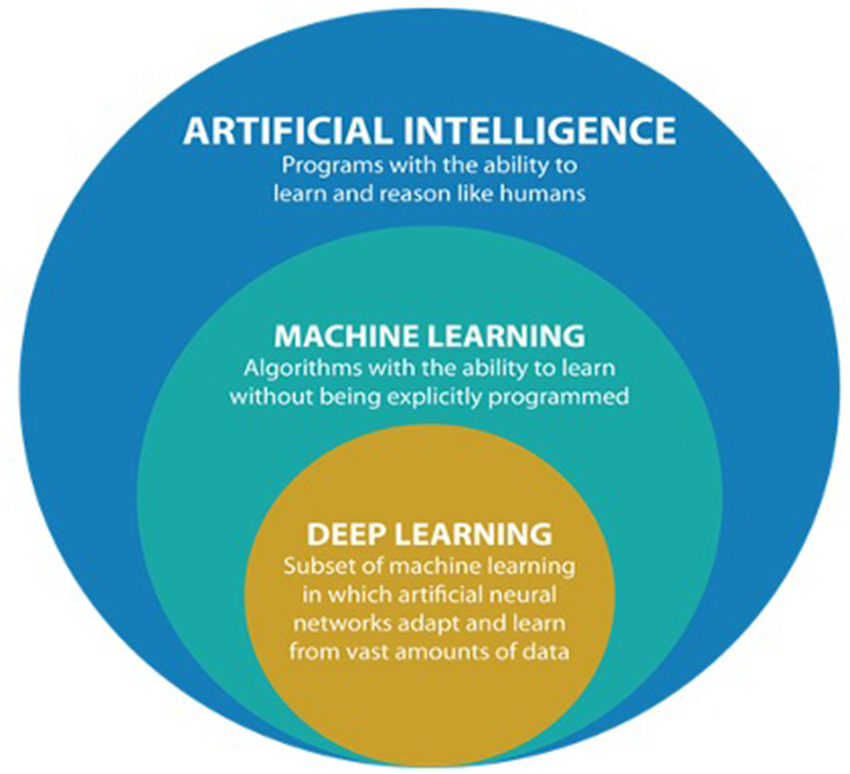

Let us first look at three definitions of AI:

1: It enables computers and machines to simulate human intelligence and problem-solving capabilities. (IBM, 2024)

2: A branch of computer science that focuses on things like robotics and machine learning, or programming computers to behave more like people. (Google, 2024)

3: More scientific: data based automated decision-making (under uncertainty) (Practicle Mathematics, 2023 First Edition)

Figure 1 Artificial Intelligence

In process control engineering, many functions could have been called AI for a long time, because process control systems were already able to work in a “human-intelligent” way. In steam boiler control, for example, advanced control strategies were already used to switch over feedwater pumps that needed maintenance. Based on sensor data and algorithms, the system could automatically decide to switch over to a redundant pump; this is called predictive maintenance. So, this was already a form of AI.
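The automatic pump switchover described above can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the logic of any actual boiler control system: the vibration measurement, the alarm limit `VIBRATION_LIMIT_MM_S`, the moving-average window and the names `Pump` and `select_duty_pump` are all hypothetical. The decision is based on the average of the last few readings rather than on a single value, so that one noisy sample does not trigger a switchover.

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical sketch: the limit, window and names are assumptions,
# not values from a real boiler control system.
VIBRATION_LIMIT_MM_S = 7.1  # hypothetical alarm limit for bearing vibration (mm/s)
WINDOW = 5                  # number of recent samples in the moving average


@dataclass
class Pump:
    name: str
    # Keeps only the last WINDOW readings; older ones drop out automatically.
    readings: deque = field(default_factory=lambda: deque(maxlen=WINDOW))

    def add_reading(self, vibration_mm_s: float) -> None:
        self.readings.append(vibration_mm_s)

    def needs_maintenance(self) -> bool:
        # Decide on the moving average, not on a single spike,
        # so that measurement noise does not cause a switchover.
        if len(self.readings) < WINDOW:
            return False
        return sum(self.readings) / len(self.readings) > VIBRATION_LIMIT_MM_S


def select_duty_pump(duty: Pump, standby: Pump) -> Pump:
    """Return the pump that should run: switch to standby if the duty pump degrades."""
    if duty.needs_maintenance() and not standby.needs_maintenance():
        return standby
    return duty


# Example: pump A's vibration rises steadily, pump B stays healthy.
pump_a = Pump("FW-pump A")
pump_b = Pump("FW-pump B")
for v in [3.0, 4.5, 6.8, 8.2, 9.5, 10.1]:
    pump_a.add_reading(v)
    pump_b.add_reading(2.5)
    running = select_duty_pump(pump_a, pump_b)
print(running.name)  # prints: FW-pump B
```

A real system would also latch the switchover and inform the operator; this sketch only shows the data-based decision itself.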

In fact, process control was a kind of forerunner of AI. Advanced control was necessary to assist the operator and to take over manual control in complex processes and hazardous environments, and to keep the process safe and available 24 hours a day.

Nowadays, AI can be applied in many more areas thanks to faster computers, wider and faster internet access, and the knowledge acquired from process control techniques.

So, is AI a risk or a blessing?

In many areas, AI will be a blessing. With the available data it will, for example, be possible to detect diseases and to develop autonomous driving.

It may get tricky when using AI neural networks, so-called deep learning (see Figure 1).

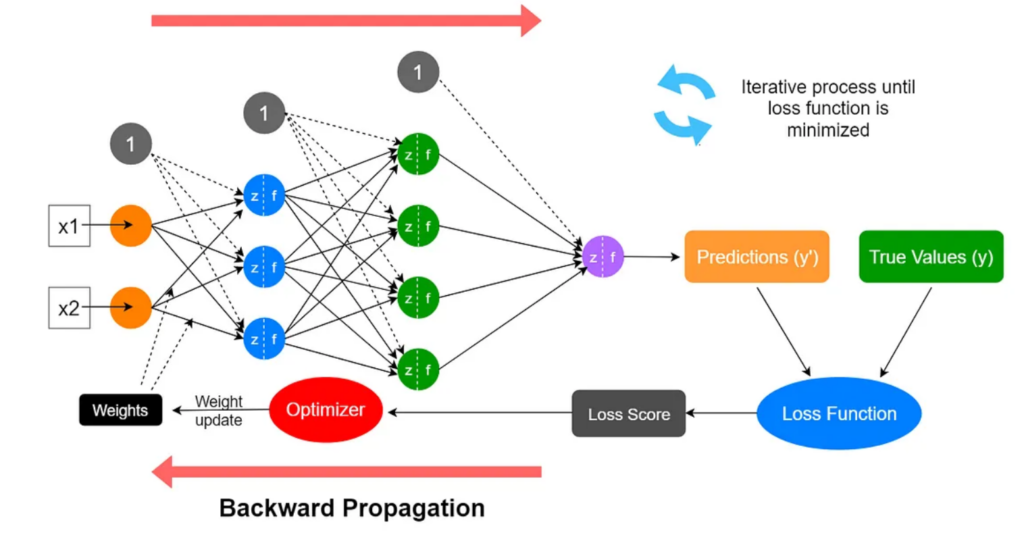

Figure 2 Neural Network

This deep learning AI system (see Figure 2) uses interconnected nodes, or neurons, in a layered structure that resembles the human brain. When such a system receives feedback on the errors in its output (backpropagation), the network can be optimized by adjusting its internal weights. This creates an adaptive system that learns from its mistakes and hence improves continuously. As a result, it can identify phenomena, weigh options, draw conclusions and take decisions autonomously, much like human beings, who learn by correcting their mistakes.

The nodes and interconnections are implemented as mathematical algorithms, which are programmed and run on PCs with powerful processors.
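The learning-by-feedback principle described above can be illustrated with a very small network in plain Python. This is a minimal sketch under stated assumptions, not how production deep learning systems are built (they use dedicated libraries and far larger networks): the network size (2 inputs, 3 hidden neurons, 1 output), the XOR training task, the learning rate and the names `TinyNetwork` and `train_step` are all chosen for illustration. Each node is just a weighted sum passed through a sigmoid function; backpropagation sends the output error back through the layers and nudges every weight in the direction that reduces the error.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Activation function of each neuron."""
    return 1.0 / (1.0 + math.exp(-z))


class TinyNetwork:
    """Hypothetical illustration: 2 inputs -> hidden sigmoid neurons -> 1 sigmoid output."""

    def __init__(self, n_hidden: int = 3, seed: int = 0) -> None:
        rng = random.Random(seed)
        # w1[j][i]: weight from input i to hidden neuron j; b1[j]: bias of hidden neuron j
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        # w2[j]: weight from hidden neuron j to the output neuron
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        """Forward pass: return hidden activations and the network output."""
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def loss(self, data) -> float:
        """Mean squared error over a list of (input, target) pairs."""
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    def train_step(self, x, target: float, lr: float) -> None:
        h, y = self.forward(x)
        # Backward pass: error signal at the output (proportional to the
        # derivative of the squared error, times the sigmoid slope y * (1 - y)) ...
        delta_out = (y - target) * y * (1 - y)
        # ... propagated back to each hidden neuron through its outgoing weight.
        delta_hidden = [delta_out * w * hj * (1 - hj) for w, hj in zip(self.w2, h)]
        # Gradient descent: move every weight against its error gradient.
        for j, hj in enumerate(h):
            self.w2[j] -= lr * delta_out * hj
        self.b2 -= lr * delta_out
        for j, d in enumerate(delta_hidden):
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * d * xi
            self.b1[j] -= lr * d


# Example: learn XOR, a task a single neuron cannot solve without a hidden layer.
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
net = TinyNetwork(n_hidden=3, seed=0)
print("loss before:", round(net.loss(XOR), 3))
for _ in range(5000):
    for x, t in XOR:
        net.train_step(x, t, lr=0.5)
print("loss after:", round(net.loss(XOR), 3))
```

The loss after training should be lower than before; how far it drops depends on the random starting weights.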

These neural networks may have some negative impacts:

- AI-controlled systems may become “too” autonomous, which increases their intelligence, and hence their capabilities, in an uncontrollable way.

- The mathematical algorithms are quite complex and can only be developed by experts. This makes it possible to misuse them, and such misuse can be hard to detect.

- Wrong data introduced during the commissioning phase, deliberately or not, may result in a malfunctioning, hazardous and uncontrollable system.

- Malicious data received during the operation phase may change the original system in an uncontrollable way.
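How wrong or malicious data can change a learned system can be shown with a deliberately simple learning rule instead of a neural network. In this hypothetical Python sketch, the “model” learns an alarm threshold as the midpoint between the average safe reading and the average unsafe reading in labeled training data; the pressure values, the labels and the names `learn_threshold` and `is_alarm` are illustrative assumptions. Three mislabeled samples are enough to move the threshold so far that an unsafe reading no longer raises an alarm. A system that keeps retraining on operational data is exposed to the same effect during operation.

```python
from statistics import mean


def learn_threshold(samples):
    """Hypothetical learning rule: alarm threshold = midpoint between the mean
    'safe' and the mean 'unsafe' reading in (value, is_unsafe) training data."""
    safe = [v for v, unsafe in samples if not unsafe]
    unsafe = [v for v, unsafe in samples if unsafe]
    return (mean(safe) + mean(unsafe)) / 2


def is_alarm(value, threshold):
    return value > threshold


# Hypothetical commissioning data: pressures (bar) labeled by an engineer.
clean = [(40, False), (42, False), (44, False), (60, True), (62, True), (64, True)]

# The same data plus a few wrong labels: very high pressures recorded as 'safe'.
poisoned = clean + [(90, False), (95, False), (100, False)]

t_clean = learn_threshold(clean)
t_poisoned = learn_threshold(poisoned)
print(t_clean, t_poisoned)                                # 52.0 65.25
print(is_alarm(65, t_clean), is_alarm(65, t_poisoned))    # True False
```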

The realist school of international relations holds that the world is anarchic and that war is often inevitable. Governments may try to contain the development of autonomous AI for warfare, but there will always be persons or organizations that misuse these AI systems, and autonomous systems may even cause wars themselves.

Moreover, as has so often happened in history, war itself will accelerate technological development. The development of V1 missiles and atomic weapons during WW2 is a good example. The war in Ukraine currently shows the same pattern: drones and signal-jamming systems are being developed extremely fast, introducing a new (AI) kind of warfare and an extra acceleration.

Jamming systems that prevent the remote control of drones may even lead to a proliferation of autonomous AI systems, because a drone that can no longer be steered remotely has to decide for itself.

Unrealistic? No! Process automation went through the same development within 30 years: from pneumatic control systems to advanced, autonomous microprocessor-controlled systems. In many cases the operator can only observe the process and cannot control it.

Conclusion:

- Autonomous AI systems may, or rather will, take over the future battlefield.

- There will be more proxy wars, because anonymous AI systems can easily be used by third parties such as non-state actors (NSAs).

- AI systems may become uncontrollable and may even cause wars. The only winner may be the AI-controlled system itself.

- In this case there may be one positive point: the struggle against AI-controlled systems can unite people, because they share one common (AI) enemy. After all, the enemy of my enemy is my friend…

- If the neural network is supplied only with limited, safe data and does not use feedback, it may be contained.
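The containment idea in the last point can be sketched as two guards around an already trained model: its parameters are frozen after commissioning, so operational data cannot change them, and inputs outside the range the model was commissioned for are rejected. In this hypothetical Python sketch a simple linear model stands in for a trained neural network, and the names `FrozenModel`, `InputGuard` and `contained_predict`, as well as the range limits, are assumptions. It only illustrates the principle; it does not show that such measures are sufficient to contain a real AI system.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class FrozenModel:
    """Hypothetical stand-in for a trained network. frozen=True makes attribute
    assignment raise an error, and the weights are stored in an immutable tuple."""
    weights: tuple
    bias: float

    def predict(self, x: Sequence[float]) -> float:
        return sum(w * xi for w, xi in zip(self.weights, x)) + self.bias


@dataclass(frozen=True)
class InputGuard:
    """Accept only inputs inside the range the model was commissioned for."""
    low: float
    high: float

    def check(self, x: Sequence[float]) -> None:
        for xi in x:
            if not (self.low <= xi <= self.high):
                raise ValueError(
                    f"input {xi} outside commissioned range [{self.low}, {self.high}]"
                )


def contained_predict(model: FrozenModel, guard: InputGuard, x: Sequence[float]) -> float:
    guard.check(x)            # limited, safe data only
    return model.predict(x)   # inference only: no feedback, no weight update


model = FrozenModel(weights=(0.5, -0.25), bias=1.0)
guard = InputGuard(low=0.0, high=10.0)
print(contained_predict(model, guard, [2.0, 4.0]))  # 1.0
try:
    contained_predict(model, guard, [2.0, 50.0])
except ValueError as err:
    print("rejected:", err)
```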

Question:

Has the (intelligent) human species not gone through the same development that AI systems are going through now? Humans have become able to master animals and nature. By creating an AI copy of ourselves, we may have done the worst thing of all…

Martin van Heun

Senior process control engineer

References:

Google. (2024). Retrieved from www.google.com

IBM. (2024). Retrieved from www.ibm.com

Practical Mathematics. (2023, 1st ed.). Ghosh. BPB Publications.

Rand. (2024, March 11). Is AI an existential risk? Q&A with RAND experts. RAND Corporation.